Hidden Markov models. Deleted interpolation. Linear and logistic regression: Maximum Entropy models; logit and logistic function; relationship to sigmoid and softmax. Transformation-based POS tagging. Handling out-of-vocabulary words.
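
As a quick illustration of how the logit, the logistic (sigmoid) function, and softmax relate, here is a minimal sketch in plain Python. The function names and the value of `z` are our own choices for illustration, not code from the lecture. It checks two facts: the logit is the inverse of the sigmoid, and a two-class softmax over the scores `[z, 0]` gives the same probability as `sigmoid(z)`.

```python
import math

def sigmoid(z):
    # Logistic function: maps a real-valued score to a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    # Log-odds of a probability; the inverse of the sigmoid.
    return math.log(p / (1.0 - p))

def softmax(scores):
    # Subtract the max score before exponentiating, for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

z = 1.5                          # arbitrary score, chosen for illustration
p = sigmoid(z)
two_class = softmax([z, 0.0])    # binary case: second class has score 0

print(p, two_class[0])           # the two probabilities coincide
print(logit(p))                  # recovers z (up to rounding)
```

With more than two classes, softmax generalizes the same idea: every class gets its own score, and the scores are normalized into a distribution.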

Home Page and Blog of the Multilingual NLP course @ Sapienza University of Rome

Tuesday, March 31, 2020

Thursday, March 26, 2020

Tuesday, March 24, 2020

Lecture 8 (24/03/2020, Google meet, 2 hrs): perplexity, smoothing, interpolation

Chain rule and n-gram estimation. Perplexity and its close relationship with entropy. Smoothing and interpolation.
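
To make the link between perplexity and entropy concrete, here is a small sketch in plain Python. The toy corpus, the interpolation weight `lam`, and all function names are assumptions made for illustration, not material from the lecture. It interpolates a maximum-likelihood bigram estimate with an add-one smoothed unigram estimate, then computes perplexity as 2 raised to the per-token cross-entropy (in bits).

```python
import math
from collections import Counter

# Toy data, invented for this example.
train = "the cat sat on the mat the cat ate".split()
test = "the cat sat on the mat".split()

unigrams = Counter(train)
histories = Counter(train[:-1])            # words that have a successor
bigrams = Counter(zip(train, train[1:]))
vocab_size = len(unigrams)
total = len(train)

def p_unigram(w):
    # Add-one (Laplace) smoothed unigram probability.
    return (unigrams[w] + 1) / (total + vocab_size)

def p_bigram_mle(prev, w):
    # Unsmoothed maximum-likelihood bigram estimate; 0 for unseen histories.
    if histories[prev] == 0:
        return 0.0
    return bigrams[(prev, w)] / histories[prev]

def p_interp(prev, w, lam=0.7):
    # Linear interpolation of the bigram and unigram estimates.
    return lam * p_bigram_mle(prev, w) + (1 - lam) * p_unigram(w)

def perplexity(tokens, lam=0.7):
    # Cross-entropy in bits per predicted token, and perplexity = 2 ** cross-entropy.
    log2_sum = sum(math.log2(p_interp(prev, w, lam))
                   for prev, w in zip(tokens, tokens[1:]))
    cross_entropy = -log2_sum / (len(tokens) - 1)
    return 2 ** cross_entropy, cross_entropy

ppl, h = perplexity(test)
print(ppl, h)
```

Because the unigram term is smoothed, the interpolated probability is never zero for words in the vocabulary, so the logarithm is always defined. In practice `lam` would be tuned on held-out data rather than fixed by hand.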

Thursday, March 19, 2020

Lecture 7 (19/03/2020, Google meet, 3 hrs): word2vec in PyTorch + language modeling

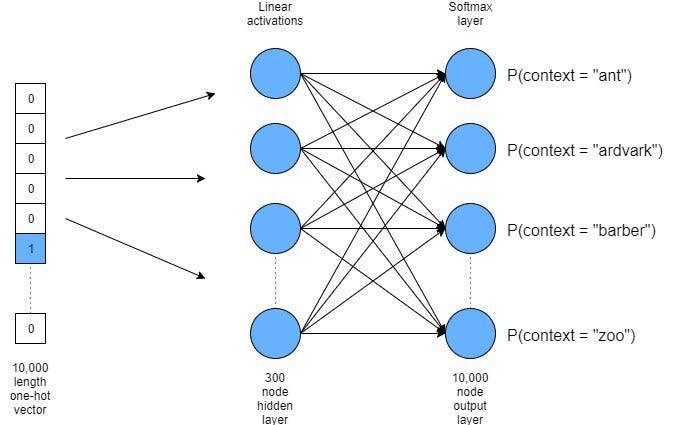

Word2vec in PyTorch. We introduced n-gram models (unigrams, bigrams, trigrams), how they model probabilities, and their issues.
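
As a rough sketch of n-gram probability modeling and one of its typical issues, the plain-Python example below estimates bigram probabilities by counting and multiplies them to score a sentence. The two-sentence corpus, the `<s>` / `</s>` boundary markers, and the function names are assumptions made for illustration. It shows the sparsity problem: a sentence containing a single unseen bigram gets probability zero.

```python
from collections import Counter

# Tiny invented corpus with sentence-boundary markers.
corpus = [
    ["<s>", "i", "like", "nlp", "</s>"],
    ["<s>", "i", "like", "pizza", "</s>"],
]

history_counts = Counter()
bigram_counts = Counter()
for sent in corpus:
    for prev, w in zip(sent, sent[1:]):
        history_counts[prev] += 1
        bigram_counts[(prev, w)] += 1

def bigram_prob(prev, w):
    # Maximum-likelihood estimate: count(prev, w) / count(prev).
    if history_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, w)] / history_counts[prev]

def sentence_prob(tokens):
    # Bigram approximation: product of P(w_i | w_{i-1}) over the sentence.
    prob = 1.0
    for prev, w in zip(tokens, tokens[1:]):
        prob *= bigram_prob(prev, w)
    return prob

seen = sentence_prob(["<s>", "i", "like", "nlp", "</s>"])
unseen = sentence_prob(["<s>", "i", "like", "pasta", "</s>"])
print(seen)    # 0.5
print(unseen)  # 0.0, because "like pasta" never occurs in the corpus
```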

Tuesday, March 17, 2020

Friday, March 13, 2020

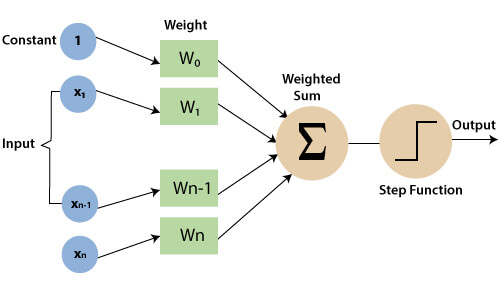

Lecture 5 (12/03/2020, Google meet, 3 hrs): more on the Perceptron (notebook) and feed-forward (FF) networks

More on the perceptron. The Perceptron in PyTorch. Datasets, training, gradient descent.
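
For readers who want to see the training loop spelled out, here is a minimal perceptron sketch. It is written in plain Python rather than PyTorch so that it runs without dependencies; the AND dataset, the learning rate, and the function names are assumptions made for illustration and are not the course notebook.

```python
# Toy dataset: logical AND, which is linearly separable.
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, x):
    # Step activation on the weighted sum of the inputs.
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def train_perceptron(samples, lr=1.0, max_epochs=50):
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in samples:
            error = y - predict(weights, bias, x)
            if error != 0:
                # Perceptron rule: move the boundary toward the misclassified point.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:
            break  # a full pass with no errors: training has converged
    return weights, bias

weights, bias = train_perceptron(data)
predictions = [predict(weights, bias, x) for x, _ in data]
print(weights, bias, predictions)
```

A PyTorch version would typically replace the hand-written update with a differentiable loss and an optimizer step, while the dataset iteration and epoch loop keep the same overall shape.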

Tuesday, March 10, 2020

Tuesday, March 3, 2020
