
Home Page and Blog of the Multilingual NLP course @ Sapienza University of Rome

Introduction to text summarization and evaluation metrics (BLEU, ROUGE, BERTScore, alternatives). Open issues in NLP: superhuman performance in current benchmarks, stochastic parrots, evaluation of text quality. Thesis topics and more. Closing.
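To give a feel for how ROUGE scores a summary, the sketch below computes ROUGE-N (n-gram overlap) between one candidate summary and one reference. It is a simplified sketch, assuming lowercased whitespace tokenization, a single reference and no stemming, unlike the official ROUGE toolkit; `ngrams` and `rouge_n` are hypothetical helper names. ROUGE is traditionally reported as recall, i.e., how much of the reference the candidate covers.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """Simplified ROUGE-N (assumption: whitespace tokens, one reference, no stemming)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # matches clipped to the minimum count
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(cand.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


print(rouge_n("the cat sat on the mat", "the cat is on the mat", n=1))
print(rouge_n("the cat sat on the mat", "the cat is on the mat", n=2))
```

Here 5 of the 6 reference unigrams are matched (ROUGE-1 recall 5/6), but only 3 of the 5 reference bigrams (ROUGE-2 recall 3/5): purely lexical overlap is exactly the limitation that embedding-based metrics such as BERTScore try to address.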

Foundations of sequence-to-sequence models and their use with Hugging Face.

Introduction to machine translation (MT) and the history of MT. Overview of statistical MT. Beam search for decoding. Introduction to neural machine translation (NMT): the encoder-decoder neural architecture. The BLEU evaluation score. Performance and recent improvements. Attention in NMT.
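BLEU combines clipped (modified) n-gram precisions p_n, usually for n = 1 to 4, through a geometric mean, and multiplies the result by a brevity penalty BP = 1 if c > r, else exp(1 - r/c), where c and r are the candidate and reference lengths. The sketch below is a minimal sentence-level version, assuming a single reference, whitespace tokenization and no smoothing; `bleu` is a hypothetical helper. BLEU is normally computed over a whole test corpus, so for real experiments a standard implementation such as sacreBLEU is the safer choice.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU (assumption: one reference, whitespace tokens, no smoothing)."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        c_counts, r_counts = ngram_counts(cand, n), ngram_counts(ref, n)
        clipped = sum((c_counts & r_counts).values())  # modified n-gram precision numerator
        total = sum(c_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precisions.append(math.log(clipped / total))
    c, r = len(cand), len(ref)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the precisions, computed in log space
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


print(bleu("the cat sat on the mat", "the cat is on the mat", max_n=2))
print(bleu("the cat sat on the mat", "the cat is on the mat"))
```

With max_n=2 the score is sqrt(5/6 · 3/5) ≈ 0.707, while the default 4-gram version drops to 0 because no 4-gram matches: one reason sentence-level BLEU is usually smoothed or avoided.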

More on Semantic Role Labeling. Semantic Parsing: task, motivation and applications, Abstract Meaning Representation (AMR) and BabelNet Meaning Representation (BMR), Natural Language Generation from semantic parses

Semantic roles. Frame resources: PropBank, FrameNet, VerbAtlas. Semantic Role Labeling (SRL). Multilingual SRL. Cross-inventory approaches to SRL. Topics for thesis or excellence path.

Word Sense Disambiguation (WSD): introduction to the task. Purely data-driven and neuro-symbolic approaches. WSD cast as sense comprehension. Issues. Semantic Role Labeling: introduction to the task. Inventories. Neural approaches. Issues.

Assignment of homework 2: Word Sense Disambiguation. Introduction to Word Sense Disambiguation. First introduction to explicit and latent sense embeddings. SensEmbed.

More on the Transformer architecture. Pre-trained language models: BERT, GPT, RoBERTa, XLM. Introduction to lexical semantics: meaning representations, WordNet, BabelNet. Neuro-symbolic NLP.

Neural language modeling. Context2vec. Neural language models with BiLSTMs. Contextualized word representations. Introduction to attention. Introduction to the Transformer architecture.
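The core operation of the Transformer is scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V. The sketch below computes it for a single query vector in plain Python, assuming one head, no learned projection matrices and no masking; in practice the same computation runs as batched matrix products in PyTorch. `attention` and `softmax` are illustrative helper names.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query (assumption: single head, no projections)."""
    d_k = len(query)
    # Similarity between the query and every key, scaled by sqrt(d_k)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    # Output = attention-weighted average of the value vectors
    output = [sum(w * value[j] for w, value in zip(weights, values))
              for j in range(len(values[0]))]
    return output, weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
out, w = attention([4.0, 0.0], keys, values)
print(w, out)
```

A query aligned with the first key puts most of its weight on the first value vector; a zero query attends uniformly and returns the plain average of the values.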

Introduction to lexical semantics. Lexicon, lemmas and word forms. Introduction to the notion of concepts, the triangle of meaning, concepts vs. named entities. Word senses: monosemy vs. polysemy. Key tasks for Natural Language Understanding: Word Sense Disambiguation (within lexical semantics), Semantic Role Labeling and Semantic Parsing (sentence-level).

What is a language model? N-gram models (unigrams, bigrams, trigrams), together with their probability modeling and issues. Chain rule and n-gram estimation.
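The chain rule factorizes P(w_1 ... w_n) into a product of conditional probabilities, and a bigram model approximates each factor as P(w_i | w_{i-1}), estimated by maximum likelihood as C(w_{i-1} w_i) / C(w_{i-1}). The sketch below assumes a tiny toy corpus, whitespace tokenization, `<s>`/`</s>` sentence-boundary markers and no smoothing; `train_bigram` and `sentence_prob` are hypothetical helper names.

```python
from collections import Counter


def train_bigram(sentences):
    """MLE bigram counts (assumption: <s>/</s> boundary markers, no smoothing)."""
    histories, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        histories.update(tokens[:-1])  # C(w_{i-1}): every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return histories, bigrams


def sentence_prob(sentence, histories, bigrams):
    """Chain rule with the bigram approximation: product of P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if histories[prev] == 0:
            return 0.0  # unseen history: MLE is undefined, treat as zero
        prob *= bigrams[(prev, word)] / histories[prev]
    return prob


corpus = ["I am Sam", "Sam I am", "I do not like green eggs and ham"]
histories, bigrams = train_bigram(corpus)
print(sentence_prob("I am Sam", histories, bigrams))
print(sentence_prob("Sam am", histories, bigrams))
```

"I am Sam" gets 2/3 · 2/3 · 1/2 · 1/2 = 1/9, whereas "Sam am" gets probability 0 because the bigram "Sam am" never occurs in training: the sparsity issue that motivates smoothing.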

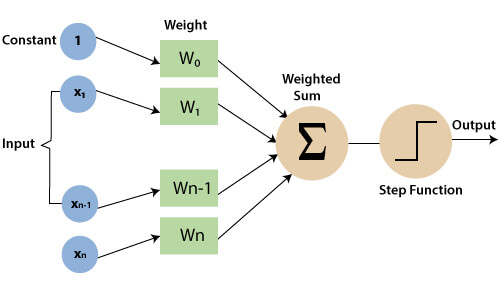

Introduction to Supervised, Unsupervised and Reinforcement Learning. The Supervised Learning framework. From real to computational: feature extraction and feature vectors. Feature Engineering and inferred features. PyTorch vs. TensorFlow. The perceptron model. What is Deep Learning? Training weights and backpropagation.
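The perceptron can be made concrete with its classic learning rule w ← w + η (y − ŷ) x, which only changes the weights when an example is misclassified. The sketch below trains it on a toy, linearly separable dataset (the logical AND of two binary features, an illustrative choice); `train_perceptron` and `predict` are hypothetical names. Deep learning replaces the step function with differentiable activations so that multi-layer networks can be trained with backpropagation and gradient descent.

```python
def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: w <- w + lr * (y - y_hat) * x."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            error = y - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias


# Toy linearly separable data (assumption): logical AND of two binary features
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print(weights, bias, [predict(weights, bias, x) for x, _ in AND])
```

On separable data like AND the rule is guaranteed to converge; on XOR it never would, which is one classic motivation for multi-layer networks.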

Introduction to classification in NLP. The task of Sentiment Analysis. Probabilistic classification. Logistic Regression and its use for classification. Explicit vs. implicit features. The cross-entropy loss function.
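For binary sentiment classification, logistic regression computes ŷ = σ(w · x + b) and is trained by minimizing the cross-entropy loss L = −[y log ŷ + (1 − y) log(1 − ŷ)], whose gradient with respect to each weight is simply (ŷ − y) x_i. The sketch below uses stochastic gradient descent on a tiny set of hypothetical explicit features (counts of positive and negative lexicon words per review); the data, feature choice and helper names are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """P(y = 1 | x) under binary logistic regression."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def cross_entropy(y_hat, y, eps=1e-12):
    """Binary cross-entropy for one example (eps avoids log(0))."""
    return -(y * math.log(y_hat + eps) + (1 - y) * math.log(1 - y_hat + eps))


def train(data, lr=0.1, epochs=200):
    """Stochastic gradient descent on the cross-entropy loss."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            grad = predict_proba(weights, bias, x) - y  # dL/dz = y_hat - y
            weights = [w - lr * grad * xi for w, xi in zip(weights, x)]
            bias -= lr * grad
    return weights, bias


def mean_loss(weights, bias, data):
    return sum(cross_entropy(predict_proba(weights, bias, x), y) for x, y in data) / len(data)


# Hypothetical features: (# positive-lexicon words, # negative-lexicon words); 1 = positive
data = [((3, 0), 1), ((2, 1), 1), ((0, 2), 0), ((1, 3), 0)]
weights, bias = train(data)
print(mean_loss([0.0, 0.0], 0.0, data), mean_loss(weights, bias, data))
print([round(predict_proba(weights, bias, x), 3) for x, _ in data])
```

Before training every review gets probability 0.5 and the mean loss is ln 2; after training the loss is lower and the learned weights favor positive-lexicon counts, which is how explicit features become interpretable evidence.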

Introduction to NLP in Rome. Introduction to Natural Language Processing: understanding and generation. What is NLP? The Turing Test: criticisms and alternatives. Tasks in NLP and their importance (with examples). Key areas.

We gave an introduction to the course and the field it is focused on, i.e., Natural Language Processing and its challenges.

Welcome to the Sapienza NLP course blog 2023! The course is held at DIAG. Cool things are about to happen:

IMPORTANT: Lectures are currently held in person. Please join the Facebook group by signing up via the link below.

IMPORTANT (bis): Note that the course has been renamed Multilingual Natural Language Processing (if you have NLP in your study plan and want to attend my course, please contact me at [surname]@diag.uniroma1.it).