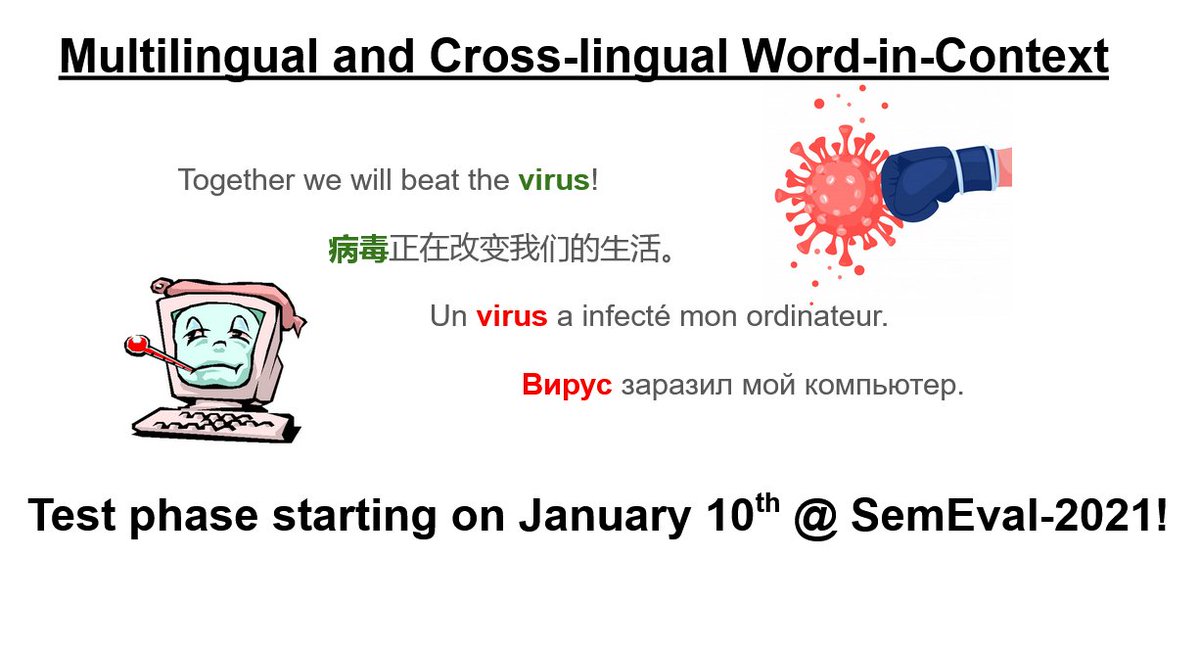

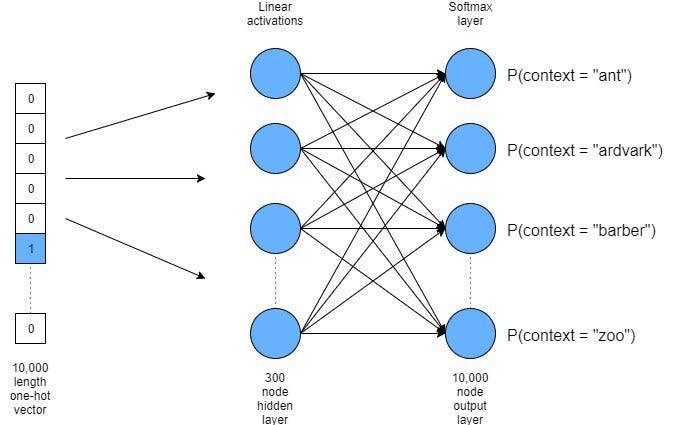

Introduction to multilingual Semantic Parsing. Abstract Meaning Represenatation. Introduction to machine translation (MT) and history of MT. Overview of statistical MT. Beam search for decoding. Introduction to neural machine translation: the encoder-decoder

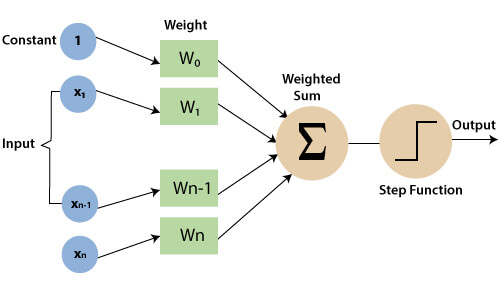

neural architecture. The BLEU

evaluation score. Performances and recent improvements. Neural MT: the encoder-decoder architecture; BART; advantages; results. Attention in NMT. Additional uses of the encoder-decoder architecture: Generationary.
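Beam search keeps the `beam_size` highest-scoring partial translations at each step instead of committing to the single best token, as greedy decoding does. The sketch below is a minimal, non-authoritative illustration that assumes a hypothetical toy next-token model (`toy_model`, `TOY`) in place of a real NMT decoder, and uses cumulative log-probability as the score, with no length normalization.

```python
import math
from typing import Callable, Dict, List, Tuple

Prefix = Tuple[str, ...]
# Hypothetical model interface: given a target prefix, return log-probabilities
# of the next token (a real NMT decoder would also condition on the source).
NextLogProbs = Callable[[Prefix], Dict[str, float]]


def beam_search(next_log_probs: NextLogProbs, beam_size: int, max_len: int,
                eos: str = "</s>") -> List[Tuple[Prefix, float]]:
    """Return hypotheses sorted by total log-probability, best first."""
    beams: List[Tuple[Prefix, float]] = [((), 0.0)]
    finished: List[Tuple[Prefix, float]] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = [
            (prefix + (tok,), score + lp)
            for prefix, score in beams
            for tok, lp in next_log_probs(prefix).items()
        ]
        # Keep only the beam_size best expansions (by cumulative log-prob).
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_size]:
            # Hypotheses ending in EOS are complete; in this simple variant
            # they leave the beam, so the beam can shrink.
            (finished if prefix[-1] == eos else beams).append((prefix, score))
        if not beams:
            break
    finished.extend(beams)  # hypotheses that reached max_len without EOS
    return sorted(finished, key=lambda c: c[1], reverse=True)


# Hypothetical toy model: greedy decoding picks "the" (p=0.6) and ends with
# p=0.30, while "a cat" has the higher total probability 0.4 * 0.9 = 0.36.
TOY = {
    (): {"the": math.log(0.6), "a": math.log(0.4)},
    ("the",): {"cat": math.log(0.5), "dog": math.log(0.5)},
    ("a",): {"cat": math.log(0.9), "dog": math.log(0.1)},
}


def toy_model(prefix: Prefix) -> Dict[str, float]:
    # Any prefix not listed above ends the sentence with probability 1.
    return TOY.get(prefix, {"</s>": 0.0})


print(beam_search(toy_model, beam_size=1, max_len=5)[0][0])  # ('the', 'cat', '</s>')
print(beam_search(toy_model, beam_size=2, max_len=5)[0][0])  # ('a', 'cat', '</s>')
```

With `beam_size=1` the search reduces to greedy decoding and misses the more probable "a cat"; a beam of two keeps "a" alive long enough to find it.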
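BLEU combines clipped n-gram precisions $p_n$ (usually $n = 1..4$) through a geometric mean and multiplies by a brevity penalty $\mathrm{BP} = 1$ if $c > r$, else $e^{1 - r/c}$, where $c$ and $r$ are the candidate and reference lengths. BLEU as usually reported is computed over a whole corpus, often with several references; the sketch below is a simplified sentence-level, single-reference version with uniform weights and no smoothing. The function names `ngram_counts` and `sentence_bleu` are illustrative, not a library API.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    # Count every contiguous n-gram (as a tuple) in the token list.
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    log_prec_sum = 0.0
    for n in range(1, max_n + 1):
        cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
        # Clipped matches: a candidate n-gram is credited at most as many
        # times as it occurs in the reference.
        matches = sum(min(count, ref[g]) for g, count in cand.items())
        total = sum(cand.values())
        if matches == 0:  # also covers total == 0; no smoothing in this sketch
            return 0.0
        log_prec_sum += math.log(matches / total)
    # Brevity penalty: punish candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions (uniform weights 1/max_n).
    return bp * math.exp(log_prec_sum / max_n)


ref = "the cat is on the mat".split()
print(sentence_bleu(ref, ref))  # 1.0
print(sentence_bleu("the cat sat on the mat".split(), ref))  # 0.0 (no shared 4-gram)
print(sentence_bleu("the cat sat on the mat".split(), ref, max_n=2))  # about 0.707
```

The second call shows why sentence-level BLEU is usually smoothed: a single missing 4-gram match drives the unsmoothed score to zero.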

Closing of the course!