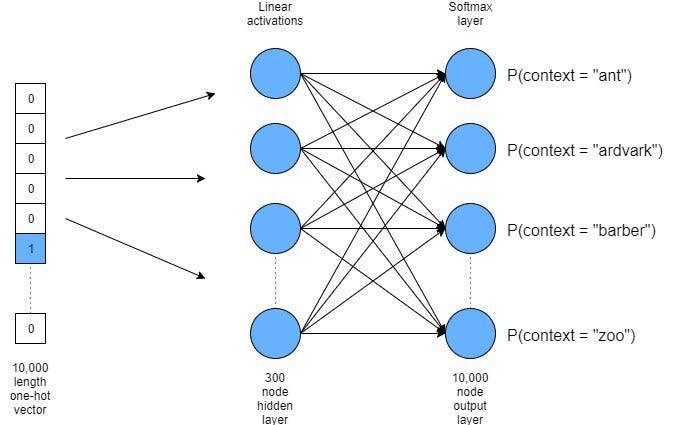

More on Word2Vec and word embeddings: hierarchical softmax; negative sampling. GloVe.
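To make negative sampling concrete, here is a minimal plain-Python sketch of the skip-gram negative-sampling loss for a single (center, context) pair. It assumes separate "input" vectors `v` and "output" vectors `u` per word, and a noise distribution built from unigram counts raised to the 3/4 power, as in the original word2vec paper. All helper names (`noise_distribution`, `sample_negatives`, `sgns_loss`) are hypothetical and chosen for illustration; hierarchical softmax and GloVe are not covered here.

```python
import math
import random
from collections import Counter


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def noise_distribution(tokens, power=0.75):
    """Unigram frequencies raised to `power`, then renormalised to sum to 1."""
    counts = Counter(tokens)
    weights = {w: c ** power for w, c in counts.items()}
    total = sum(weights.values())
    return {w: wt / total for w, wt in weights.items()}


def sample_negatives(dist, k, rng):
    """Draw k negative words (with replacement) from the noise distribution."""
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=k)


def sgns_loss(v_center, u_context, u_negatives):
    """Negative-sampling loss for one (center, context) pair:
    -log sigma(u_o . v_c) - sum_k log sigma(-u_k . v_c)."""
    loss = -math.log(sigmoid(dot(u_context, v_center)))
    for u_k in u_negatives:
        loss -= math.log(sigmoid(-dot(u_k, v_center)))
    return loss


# Toy usage: random 5-dim input (v) and output (u) vectors for each word type.
tokens = "the cat sat on the mat while the dog sat".split()
rng = random.Random(0)
dim = 5
vocab = sorted(set(tokens))
v = {w: [rng.gauss(0, 0.1) for _ in range(dim)] for w in vocab}
u = {w: [rng.gauss(0, 0.1) for _ in range(dim)] for w in vocab}

dist = noise_distribution(tokens)
negatives = sample_negatives(dist, k=3, rng=rng)
loss = sgns_loss(v["cat"], u["sat"], [u[w] for w in negatives])
```

The design point is that each training pair touches only k + 1 output vectors instead of normalising over the whole vocabulary, which is what full softmax would require.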

Recurrent Neural Networks. Gated architectures, Long Short-Term Memory networks (LSTMs). Bidirectional LSTMs and stacked LSTMs. Character embeddings. Introduction to PyTorch Lightning.
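The gating mechanism, bidirectionality and stacking can be illustrated with a small from-scratch sketch in plain Python. It assumes one weight matrix per gate applied to the concatenation of the input and the previous hidden state, small uniform initialisation and zero biases; the class and function names are hypothetical. In PyTorch the same architecture is available directly as `torch.nn.LSTM(input_size, hidden_size, num_layers=2, bidirectional=True)`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class LSTMCell:
    """One LSTM cell: input (i), forget (f), output (o) gates and candidate (g)."""

    def __init__(self, input_size, hidden_size, rng):
        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # One weight matrix per gate, applied to the concatenation [x; h_prev].
        self.W = {k: init(hidden_size, input_size + hidden_size) for k in "ifog"}
        self.b = {k: [0.0] * hidden_size for k in "ifog"}

    def step(self, x, h_prev, c_prev):
        z = list(x) + list(h_prev)
        pre = {k: [a + b for a, b in zip(matvec(self.W[k], z), self.b[k])] for k in "ifog"}
        i = [sigmoid(a) for a in pre["i"]]       # how much new content to write
        f = [sigmoid(a) for a in pre["f"]]       # how much old memory to keep
        o = [sigmoid(a) for a in pre["o"]]       # how much memory to expose
        cand = [math.tanh(a) for a in pre["g"]]  # candidate memory content
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, cand)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c


def run_lstm(cell, xs):
    """Run a cell left to right over a sequence, returning all hidden states."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    outputs = []
    for x in xs:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs


def bidirectional(fwd, bwd, xs):
    """Concatenate forward states with backward states realigned to the same positions."""
    forward = run_lstm(fwd, xs)
    backward = run_lstm(bwd, xs[::-1])[::-1]
    return [hf + hb for hf, hb in zip(forward, backward)]


rng = random.Random(0)
seq = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]  # 5 tokens, 4-dim embeddings
layer1 = bidirectional(LSTMCell(4, 3, rng), LSTMCell(4, 3, rng), seq)
# Stacking: the second BiLSTM layer reads the 6-dim (3 + 3) outputs of the first.
layer2 = bidirectional(LSTMCell(6, 3, rng), LSTMCell(6, 3, rng), layer1)
```

Each position in `layer2` holds a 6-dimensional vector that depends on both left and right context, which is what makes BiLSTMs useful for tagging tasks.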

Wednesday, March 24, 2021

Friday, March 19, 2021

Tuesday, March 16, 2021

Lecture 7 (15/03/2021, 3 hours): probabilistic language modeling

Word2Vec in PyTorch. We introduced N-gram models (unigrams, bigrams, trigrams), together with their probabilistic modeling and their issues.
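For reference, the usual n-gram formulation can be written as follows. This uses common textbook notation; $N$ for the model order and $c(\cdot)$ for corpus counts are notational assumptions, not necessarily the lecture's. The chain rule is exact, and an N-gram model approximates each factor by conditioning only on the previous $N-1$ words:

$$
P(w_1,\dots,w_n) = \prod_{i=1}^{n} P(w_i \mid w_1,\dots,w_{i-1}) \approx \prod_{i=1}^{n} P(w_i \mid w_{i-N+1},\dots,w_{i-1})
$$

For a bigram model ($N=2$) the maximum-likelihood estimate is a ratio of counts:

$$
P_{\text{MLE}}(w_i \mid w_{i-1}) = \frac{c(w_{i-1}\, w_i)}{c(w_{i-1})}
$$

One of the issues of this estimate is that any bigram unseen in training gets probability zero, which makes the whole sentence probability zero.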

Chain rule and n-gram estimation. Perplexity and its close relationship with entropy. Smoothing and interpolation.
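A minimal sketch of these ideas for a bigram model, in plain Python: add-one (Laplace) smoothing, linear interpolation of bigram and unigram MLE estimates, and perplexity computed as $2^{H}$, where $H$ is the per-token cross-entropy in bits. The sentence markers `<s>`/`</s>`, the weight `lam=0.7` and all function names are illustrative assumptions, and the toy test sentence is kept in-vocabulary (no `<unk>` handling).

```python
import math
from collections import Counter

BOS, EOS = "<s>", "</s>"


def train(sentences):
    """Count unigrams and bigrams over sentences padded with <s> ... </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sent in sentences:
        tokens = [BOS] + sent + [EOS]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def p_laplace(w_prev, w, unigrams, bigrams):
    """Add-one smoothed bigram probability; V counts every word that can be predicted."""
    V = sum(1 for t in unigrams if t != BOS)
    return (bigrams[(w_prev, w)] + 1) / (unigrams[w_prev] + V)


def p_interp(w_prev, w, unigrams, bigrams, lam=0.7):
    """Linear interpolation: lam * P_MLE(w | w_prev) + (1 - lam) * P_MLE(w)."""
    n_tokens = sum(c for t, c in unigrams.items() if t != BOS)
    p_uni = unigrams[w] / n_tokens
    p_bi = bigrams[(w_prev, w)] / unigrams[w_prev] if unigrams[w_prev] else 0.0
    return lam * p_bi + (1 - lam) * p_uni


def perplexity(sentences, prob):
    """2 ** (cross-entropy in bits per predicted token)."""
    log_prob, n = 0.0, 0
    for sent in sentences:
        tokens = [BOS] + sent + [EOS]
        for w_prev, w in zip(tokens, tokens[1:]):
            log_prob += math.log2(prob(w_prev, w))
            n += 1
    entropy = -log_prob / n
    return 2 ** entropy


train_sents = [s.split() for s in ["the cat sat", "the dog sat", "a cat ran"]]
uni, bi = train(train_sents)
test_sents = [["the", "cat", "ran"]]
pp_laplace = perplexity(test_sents, lambda a, b: p_laplace(a, b, uni, bi))
pp_interp = perplexity(test_sents, lambda a, b: p_interp(a, b, uni, bi))
```

The perplexity function makes the link with entropy explicit: a model that assigns probability 1/8 to every token has an entropy of 3 bits per token and therefore a perplexity of 8.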

Thursday, March 11, 2021

Tuesday, March 9, 2021

Saturday, March 6, 2021

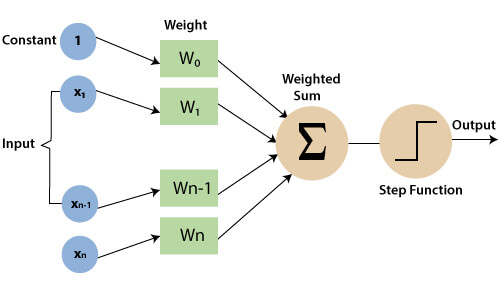

Lecture 1 (22/02/2021, 3 hours): introduction to NLP

We gave an introduction to the course and the field it is focused on, i.e., Natural Language Processing and its challenges.
